A funny thing happened yesterday: I had just merged the branch adding code coverage reports to my PlatformIO project, opened Twitter to tweet about it, and at precisely that moment PlatformIO themselves published their own blog post about it!

Naturally, I found this coincidence exciting and decided to tell my own story, with a focus on automated unit testing and code coverage reports in GitHub Actions.

Shameless plug

This article grew out of the development of the SenseShift project. If you are a fan of Virtual Reality and Open Source, consider checking it out: SenseShift is open-source firmware for an ecosystem of VR accessories. With SenseShift, you can build your own VR haptic vests, haptic gloves, and much more!

Preparing the project

Since we will run the tests and collect their coverage in GitHub Actions, where no hardware is attached to the runners, we are limited to the native platform and the local test runner. This means the project has to be structured accordingly: the source code must be split into multiple components placed in lib_dir. Otherwise, the tests would not compile, since code that depends on the Arduino framework cannot be built for the native platform.

I'm aware that the ArduinoFake library exists, but it is outside the scope of this article.

Preparing the project took me quite some time, but in the end I managed to decouple the abstract "core" methods and classes from the hardware-dependent ones.
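To keep the core components buildable for the native platform, it helps to check that none of them includes the Arduino header directly. The helper below is a hypothetical sketch, not part of SenseShift or PlatformIO, and the lib/core path in the usage line is an assumed layout; substitute your own component directories.

```shell
#!/bin/sh
# Hypothetical helper: list files under a directory that include Arduino.h,
# i.e. files that will not compile for the native platform.
find_arduino_includes() {
  # -r: recurse, -l: print only matching file names, -E: extended regex
  grep -rlE '#include[[:space:]]*[<"]Arduino\.h[>"]' "$1" | sort
}

# Usage (assumed layout): find_arduino_includes lib/core
```

An empty output means no file in that directory includes Arduino.h directly; headers pulled in transitively through other libraries are not detected by this simple grep.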

Once the tests run successfully locally, it is time to collect the baseline for future coverage reports.

Collecting initial coverage for the firmware

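To calculate the coverage percentage correctly, we first need to collect the initial coverage of the firmware. This step is essential because lcov only reports files for which it finds coverage data: without an initial baseline, files, classes, and functions that no test touches would be missing from the report, and the percentage would be computed only over the code that the tests happen to reach. We can collect the initial coverage by compiling the firmware with the following build flags: -lgcov --coverage.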

Compiling the project with these flags generates .gcno files next to the object files; they describe which source lines were compiled into the project.

However, in my case compilation failed with the error "region 'dram0_0_seg' overflowed by 64368 bytes", most likely because the coverage instrumentation adds counters and bookkeeping data that no longer fit into the DRAM segment. There is a simple workaround: changing the line len = 0x2c200 - 0xdb5c in the ~/.platformio/packages/framework-arduinoespressif32/tools/sdk/esp32/ld/memory.ld file to len = 289888 forces the firmware to compile successfully.

sed -i "s/len\s=\s0x2c200\s-\s0xdb5c/len = 289888/" ~/.platformio/packages/framework-arduinoespressif32/tools/sdk/esp32/ld/memory.ld
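The numbers involved can be checked with plain shell arithmetic. This assumes, as the error message suggests, that the patched len line is the one defining dram0_0_seg: the original segment is 0x2c200 - 0xdb5c bytes long, the linker needs 64368 bytes more than that, and 289888 leaves enough headroom for the build to link. It says nothing about how much DRAM the chip actually has.

```shell
#!/bin/sh
# Length of dram0_0_seg before the patch (value from memory.ld).
orig_len=$((0x2c200 - 0xdb5c))
# Overflow reported by the linker error.
overflow=64368
# Minimum length the instrumented firmware needs in order to link.
needed=$((orig_len + overflow))
# Length used by the workaround.
patched_len=289888

echo "original=$orig_len needed=$needed patched=$patched_len"
# prints: original=124580 needed=188948 patched=289888
```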

However, firmware built this way will not work on the actual MCU and could even damage the hardware!

Do not try to flash firmware with modified memory segments!
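Because the patch edits a file inside ~/.platformio that every local project shares, it is worth keeping a backup and restoring it as soon as the coverage build is done. The helpers below are a hypothetical sketch; they use -i.bak, which both GNU and BSD sed accept, and POSIX [[:space:]] instead of \s so the pattern works with either.

```shell
#!/bin/sh
# Hypothetical helpers around the memory.ld workaround.

# Patch the DRAM length and keep the original as memory.ld.bak.
patch_memory_ld() {
  # Refuse to run twice, which would overwrite the backup with a patched copy.
  if [ -f "$1.bak" ]; then
    echo "$1.bak already exists; restore first" >&2
    return 1
  fi
  sed -i.bak 's/len[[:space:]]*=[[:space:]]*0x2c200[[:space:]]*-[[:space:]]*0xdb5c/len = 289888/' "$1"
}

# Put the original linker script back, if a backup exists.
restore_memory_ld() {
  if [ -f "$1.bak" ]; then
    mv "$1.bak" "$1"
  fi
}

# Usage:
#   ld=~/.platformio/packages/framework-arduinoespressif32/tools/sdk/esp32/ld/memory.ld
#   patch_memory_ld "$ld"
#   # ... build and collect coverage ...
#   restore_memory_ld "$ld"
```

On a throwaway CI runner the restore step matters less, but locally it prevents a later, flashable build from silently picking up the modified segment.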

Generating lcov file for initial coverage

The initial lcov.info file is usually generated with a command like lcov -i -d ./build/binaries -c -o ./build/lcov/lcov.info.initial: the -i (--initial) flag captures zero-count baseline data from the .gcno files alone, so no .gcda files are required. The firmware, however, is compiled with the Espressif Xtensa toolchain, so we also have to point lcov at the gcov binary shipped with that toolchain via --gcov-tool:

pio run -e default

mkdir -p ./build/lcov

lcov -i -d ./.pio/build/default/ -c -o ./build/lcov/lcov.info.initial --gcov-tool ~/.platformio/packages/toolchain-xtensa-esp32/bin/xtensa-esp32-elf-gcov
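If the initial capture comes out empty, the usual cause is that the coverage flags never reached the compiler. A quick sanity check, sketched below as a hypothetical helper, is to count the .gcno files the build left next to its object files:

```shell
#!/bin/sh
# Count files with a given extension (e.g. gcno or gcda) under a build directory.
count_coverage_files() {
  find "$1" -type f -name "*.$2" | wc -l | tr -d ' '
}

# Fail early when the build produced no coverage notes at all.
require_coverage_notes() {
  n=$(count_coverage_files "$1" gcno)
  if [ "$n" -eq 0 ]; then
    echo "no .gcno files in $1; were -lgcov --coverage applied?" >&2
    return 1
  fi
  echo "$n"
}

# Usage: require_coverage_notes ./.pio/build/default
```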

Running tests and collecting covered lines

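We have already added the -lgcov --coverage flags, so compiling the tests generates .gcno files, and running them produces .gcda files with the execution counts. Now we need to turn them into an lcov tracefile by running the same command as before, but without the -i flag. The custom --gcov-tool is not needed here, assuming the native environment is built with the host GCC, which matches the system gcov: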

pio test -e native

lcov -d ./.pio/build/native/ -c -o ./build/lcov/lcov.info.test
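When the tests run repeatedly in the same build directory (typical locally, less so on a fresh CI runner), gcov does not overwrite existing .gcda files but adds the new counts to them, so earlier runs leak into the report. lcov's --zerocounters option resets them; the hypothetical helper below does the same with plain find and is meant to be called before pio test:

```shell
#!/bin/sh
# Delete accumulated coverage counters (.gcda) under a build directory so the
# next test run starts from zero. The .gcno files are kept.
clear_gcda() {
  # Nothing to clear before the first build.
  [ -d "$1" ] || return 0
  find "$1" -type f -name '*.gcda' -exec rm -f {} +
}

# Usage:
#   clear_gcda ./.pio/build/native
#   pio test -e native
```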

Merging and cleaning up results

Now that we have both the initial and the test coverage, it is time to merge them to calculate the final coverage:

# Merge coverage

lcov -o ./build/lcov/lcov.info -a ./build/lcov/lcov.info.initial -a ./build/lcov/lcov.info.test

# Clean up libraries

lcov --remove ./build/lcov/lcov.info '/usr/include/*' '*.platformio/*' '*/.pio/*' '*/tool-unity/*' '*/test/*' '*/MockArduino/*' -o ./build/lcov/lcov.info.cleaned

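The first command merges both tracefiles into the final coverage file, and the second removes entries for code that does not directly belong to the project: system headers, PlatformIO packages, the Unity test framework, the tests themselves, and the MockArduino mocks.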
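Before settling on the --remove patterns, it can be useful to see which files they would actually drop. lcov turns these shell-style wildcards into regular expressions in which * also matches /, and the same is true for a shell case pattern, so the hypothetical helper below approximates the filter by reading the SF: (source file) lines of a tracefile:

```shell
#!/bin/sh
# Print the source files in an lcov tracefile that match any of the given
# wildcard patterns, i.e. roughly what `lcov --remove` would drop.
preview_removed() {
  tracefile=$1
  shift
  sed -n 's/^SF://p' "$tracefile" | while IFS= read -r path; do
    for pattern in "$@"; do
      # Unquoted $pattern is used as a wildcard; in `case`, * also matches /.
      case $path in
        $pattern) printf '%s\n' "$path"; break ;;
      esac
    done
  done
}

# Usage:
#   preview_removed ./build/lcov/lcov.info '/usr/include/*' '*.platformio/*' '*/test/*'
```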

Putting it all together

Now that we know all the required steps, we can combine them into a single script that is easy to automate:

# Add build flags (GNU sed: appends an indented continuation line after each build_flags line)

sed -i 's/^build_flags\s*=.*/&\n    -lgcov --coverage/' platformio.ini

# Modify memory segments

sed -i "s/len\s=\s0x2c200\s-\s0xdb5c/len = 289888/" ~/.platformio/packages/framework-arduinoespressif32/tools/sdk/esp32/ld/memory.ld

# Build binaries

pio run --environment default

# Collect initial coverage

mkdir -p ./build/lcov

lcov -i -d ./.pio/build/default/ -c -o ./build/lcov/lcov.info.initial --gcov-tool ~/.platformio/packages/toolchain-xtensa-esp32/bin/xtensa-esp32-elf-gcov

# Running tests

pio test -e native

# Collect test coverage

lcov -d ./.pio/build/native/ -c -o ./build/lcov/lcov.info.test

# Merge coverage

lcov -o ./build/lcov/lcov.info -a ./build/lcov/lcov.info.initial -a ./build/lcov/lcov.info.test

# Clean up libraries

lcov --remove ./build/lcov/lcov.info '/usr/include/*' '*.platformio/*' '*/.pio/*' '*/tool-unity/*' '*/test/*' '*/MockArduino/*' -o ./build/lcov/lcov.info.cleaned

# Generate HTML report

genhtml -p "$PWD" -o ./build/coverage/ --demangle-cpp ./build/lcov/lcov.info.cleaned

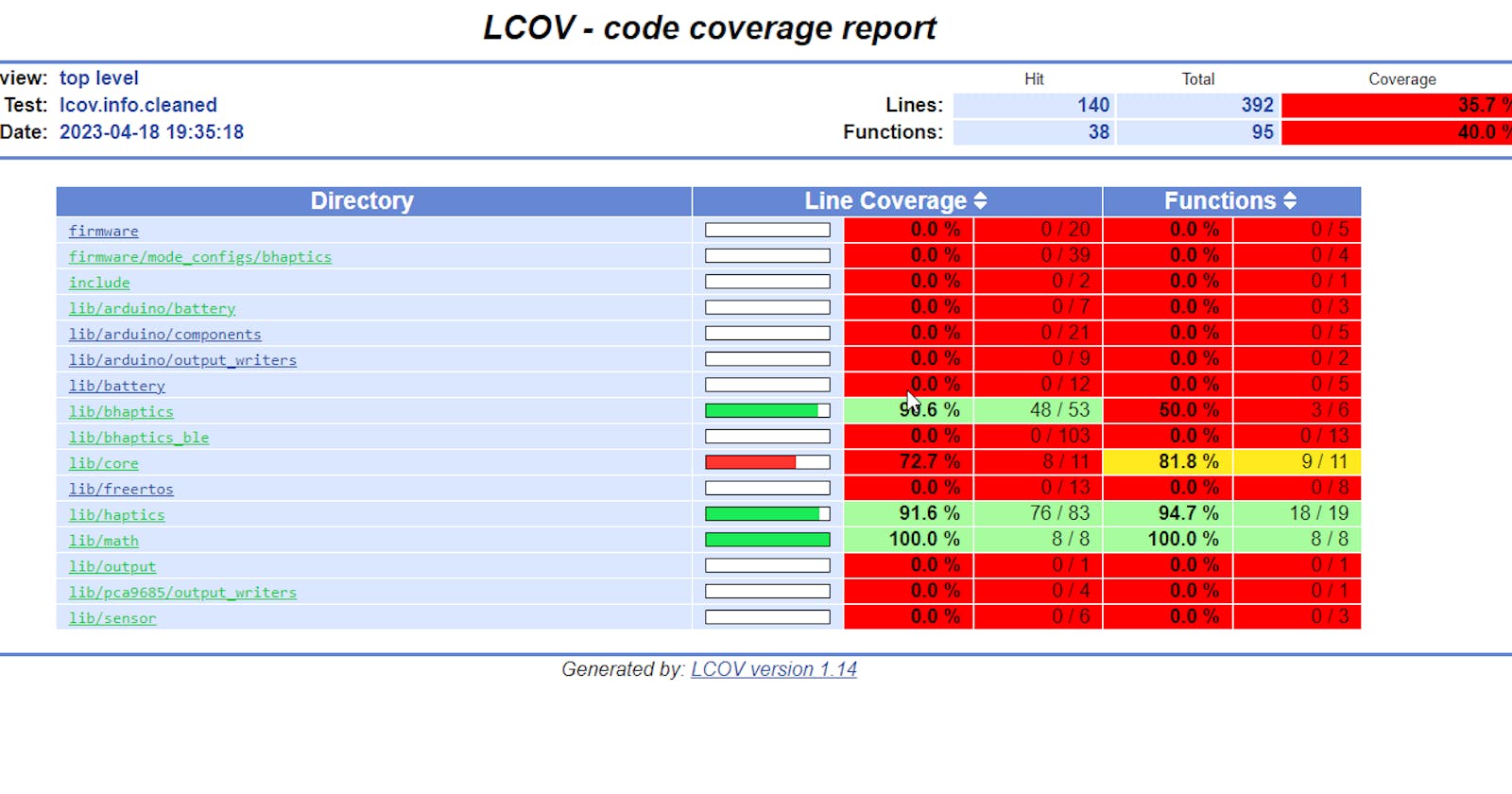

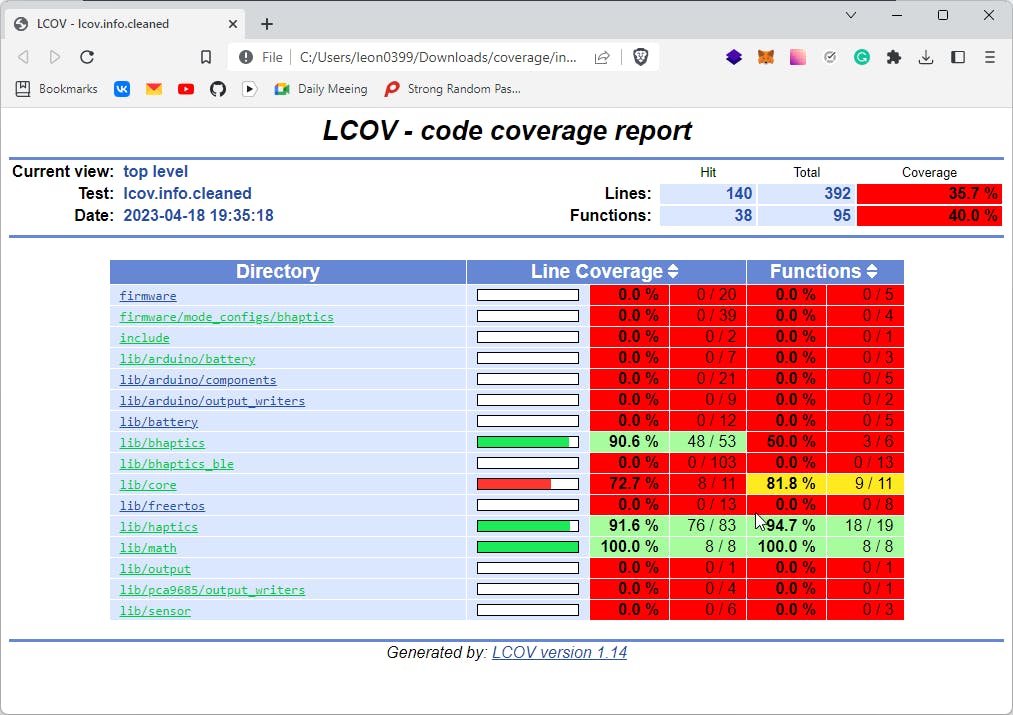

As a bonus step, with the last command, we also generate a fancy HTML web report with easily-understandable coverage information:
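Besides the HTML report, a CI job usually needs just the overall figure, for example to print it in the log or compare it against a threshold. lcov --summary prints it as well; the hypothetical helper below computes it directly from the tracefile's LF (lines found) and LH (lines hit) records, which is easy to consume from a script:

```shell
#!/bin/sh
# Overall line coverage of an lcov tracefile: sum of lines hit (LH) divided by
# sum of instrumented lines (LF) across all files, with one decimal place.
line_coverage() {
  awk -F: '
    /^LF:/ { found += $2 }
    /^LH:/ { hit += $2 }
    END {
      if (found == 0) { print "0.0"; exit }
      printf "%.1f\n", 100 * hit / found
    }
  ' "$1"
}

# Usage: line_coverage ./build/lcov/lcov.info.cleaned
```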

Automating with GitHub Actions

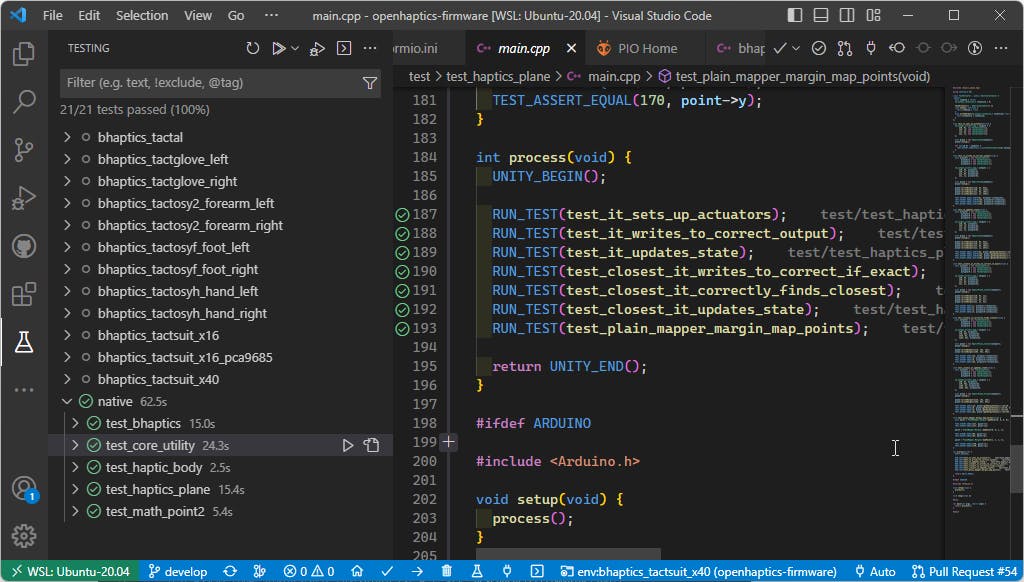

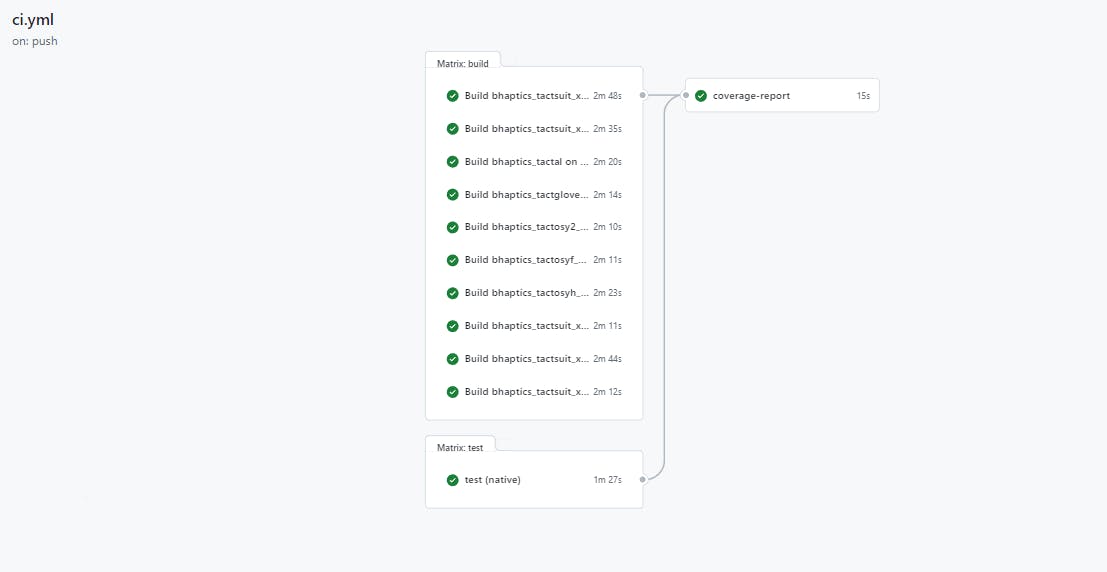

My pipeline is actually quite complex and consists of multiple environments with different configurations, and I used matrixes to compile and test multiple targets simultaneously. You can find the final result in the repository:

But in the end, it produces a comment to Pull Request with the final coverage:
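The actual workflow lives in the repository. As a rough, hypothetical sketch of the comment-building step only, a per-file Markdown table can be generated from the cleaned tracefile with awk; posting it is left to whatever tool the workflow uses, for example the GitHub CLI's gh pr comment with --body-file.

```shell
#!/bin/sh
# Render per-file line coverage from an lcov tracefile as a Markdown table.
coverage_table() {
  awk -F: '
    BEGIN {
      print "| File | Lines hit | Lines found | Coverage |"
      print "|---|---:|---:|---:|"
    }
    # substr keeps paths that themselves contain ":" intact
    /^SF:/ { file = substr($0, 4); found = 0; hit = 0 }
    /^LF:/ { found = $2 }
    /^LH:/ { hit = $2 }
    /^end_of_record/ {
      pct = (found > 0) ? 100 * hit / found : 0
      printf "| %s | %d | %d | %.1f%% |\n", file, hit, found, pct
    }
  ' "$1"
}

# Usage (PR_NUMBER is a placeholder):
#   coverage_table ./build/lcov/lcov.info.cleaned > coverage.md
#   gh pr comment "$PR_NUMBER" --body-file coverage.md
```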

In conclusion, automated unit tests and code coverage reports are essential for maintaining the quality of a project. GitHub Actions lets us run both on every change, so we can see how thoroughly the code is tested before it gets merged. By following the steps outlined in this article, you can bring the same practices to your own PlatformIO projects and benefit from the resulting improvements in code quality.